CloudFormation is a great solution for provisioning AWS infrastructure. It’s Infrastructure as Code (IaC), and organisations find it very useful because you can define the entire infrastructure in a declarative manner. This makes it very easy for both Developers as well as SysOps teams to understand and manage the infrastructure going forward.

But when it comes to provisioning an Amazon Elastic Kubernetes Service (EKS) cluster using CloudFormation and bootstrapping it with Kubernetes configuration, we face some interesting challenges.

In this article, we will discuss these challenges in more detail. I will explain how my CloudFormation-backed serverless solution can automate provisioning EKS resources (Cluster, Node Group) and apply Kubernetes configuration automatically after the cluster is created.

This article was also published on Medium.

Problem Statement

As per AWS documentation:

When you create an Amazon EKS cluster, the AWS Identity and Access Management (IAM) entity user or role, such as a federated user that creates the cluster, is automatically granted system:masters permissions in the cluster’s role-based access control (RBAC) configuration in the Amazon EKS control plane. This IAM entity doesn’t appear in any visible configuration, so make sure to keep track of which IAM entity originally created the cluster. To grant additional AWS users or roles the ability to interact with your cluster, you must edit the aws-auth ConfigMap within Kubernetes and create a Kubernetes rolebinding or clusterrolebinding with the name of a group that you specify in the aws-auth ConfigMap.

That means we can’t specify which users or groups should have access to a Cluster while provisioning it. It’s always initialised with the calling identity only. As a next step, we must apply Kubernetes configuration to update the aws-auth ConfigMap. This is achieved by executing a kubectl command.

Here comes our first challenge!

CHALLENGE 1: How to execute kubectl command from CloudFormation?

We need to make CloudFormation invoke the kubectl command execution after the Cluster is created.

CodeBuild

It’s a build platform where we can install kubectl.

Custom Resource

We can deploy a Lambda-backed CloudFormation Custom Resource just after the cluster is created. The Custom Resource invokes a Lambda function, which can trigger a build in a CodeBuild project to initialise the ConfigMap.
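To make the Custom Resource flow more concrete, here is a minimal Python sketch of what such a Lambda handler could look like. It is not the code from the repository: the `ProjectName` resource property, the `handle` function and the injected `start_build` callable are assumptions made for illustration. Only the response fields and the PUT to `ResponseURL` follow the documented CloudFormation custom resource protocol.

```python
import json
import urllib.request


def build_cfn_response(event, status, physical_id, data=None, reason=""):
    """Build the JSON body CloudFormation expects back from a custom resource."""
    return {
        "Status": status,  # "SUCCESS" or "FAILED"
        "Reason": reason,
        "PhysicalResourceId": physical_id,
        "StackId": event["StackId"],
        "RequestId": event["RequestId"],
        "LogicalResourceId": event["LogicalResourceId"],
        "Data": data or {},
    }


def send_cfn_response(event, body):
    """PUT the response to the pre-signed URL supplied in the event."""
    payload = json.dumps(body).encode("utf-8")
    request = urllib.request.Request(
        event["ResponseURL"],
        data=payload,
        method="PUT",
        headers={"Content-Type": ""},
    )
    urllib.request.urlopen(request)


def handle(event, start_build, send=send_cfn_response):
    """Start a build on Create/Update, do nothing on Delete, then report back."""
    # "ProjectName" is a hypothetical property passed in by the template.
    project = event["ResourceProperties"]["ProjectName"]
    physical_id = f"{project}-trigger"
    try:
        data = {}
        if event["RequestType"] in ("Create", "Update"):
            data["BuildId"] = start_build(project)
        body = build_cfn_response(event, "SUCCESS", physical_id, data)
    except Exception as error:
        body = build_cfn_response(event, "FAILED", physical_id, reason=str(error))
    send(event, body)
    return body
```

In a real Lambda function, `start_build` would typically wrap `boto3.client("codebuild").start_build(projectName=project)["build"]["id"]`. Note that this sketch reports success as soon as the build has started; waiting for the build to finish would need additional polling or an event-driven callback.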

But now we have a bigger challenge!

CHALLENGE 2: Identity problem

In enterprise scenarios where infrastructure is provisioned via CloudFormation, the resource provisioning permission is assigned to the CloudFormation Service Role so that end users don’t need to have those permissions.

Hence, when we create an EKS Cluster via CloudFormation, EKS initialises the new Cluster’s RBAC so that only the CloudFormation Service Role has access to it. As a next step, we have to execute a kubectl command on CodeBuild, but at this point only the CloudFormation Service Role can do that. So how would CodeBuild assume the CloudFormation Service Role?

Service Role with multiple service principals

We can create a common service role with multiple service principals, such as CloudFormation and CodeBuild. In this case, the identity that creates the Cluster is the same identity that executes the kubectl command on CodeBuild.

Solution Overview

EKS-Factory automates provisioning of EKS resources (Cluster, Node Group) and configuring these resources in a self-service manner. As explained above, we use CloudFormation to provision these resources. We also leverage Lambda and CodeBuild to configure the provisioned resources.

All the source code and configuration details discussed here are available in the GitHub repository. Please refer to the README.md file for installation instructions.

Solution Architecture

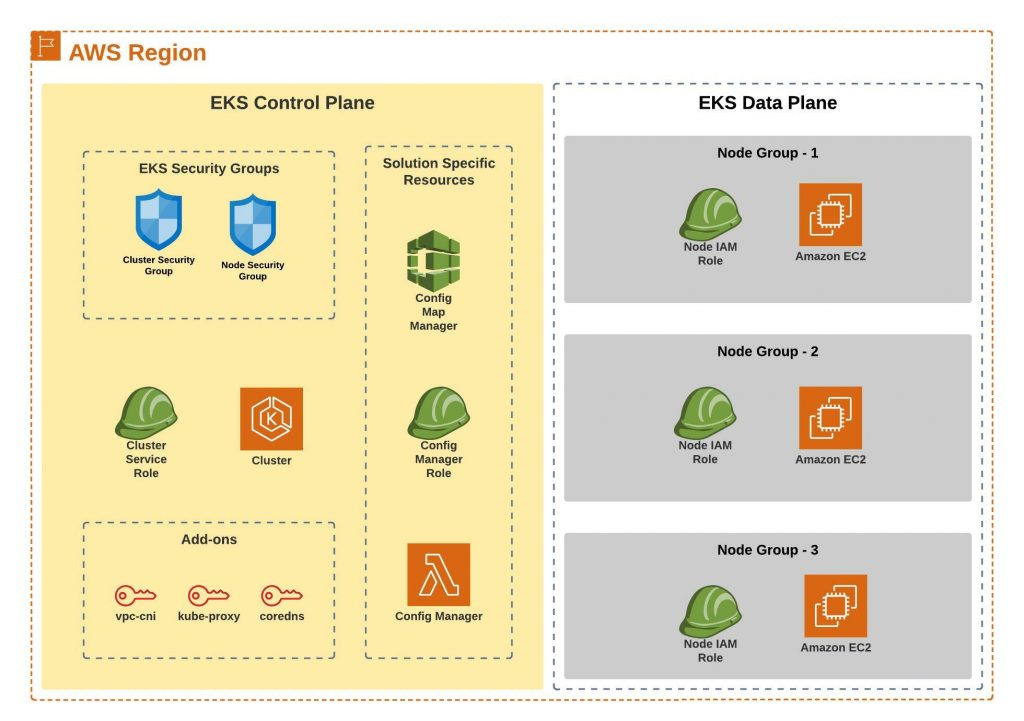

Following is the high level solution architecture diagram showing different components and underlying resources that get provisioned:

When we provision any EKS resources, we provision them within a logical boundary that we call an “environment”. There are two types of stack that we provision for any EKS environment:

STACK 1: EKS Control Plane/Environment

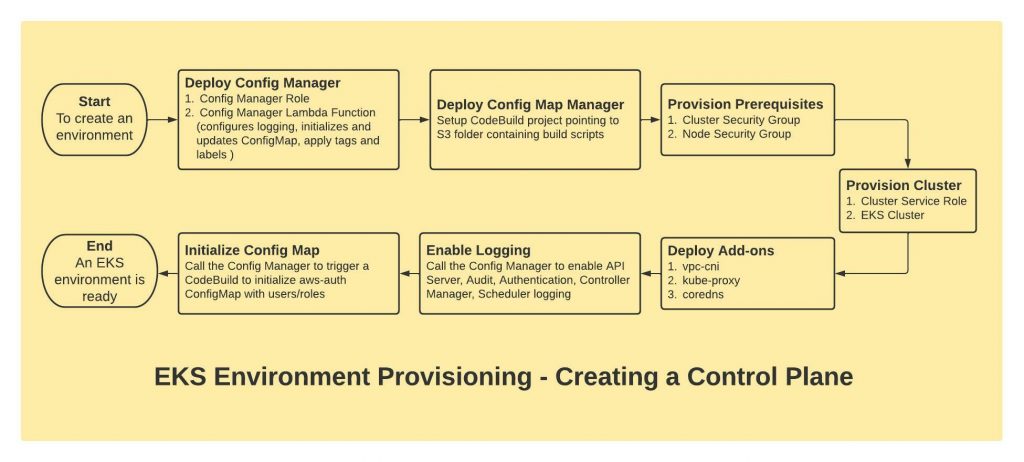

This is the first stack created for an EKS environment, and there is only one EKS Control Plane stack per environment. This stack deploys the actual EKS Cluster along with other resources. The workflow below shows the resources provisioned in this stack. A child/nested stack is created in each of these steps, making it easy to maintain the underlying resources.

STEP 1: Deploy Config Manager

First, we deploy a Lambda function that we call the Config Manager, as it performs all the configuration required at different stages of resource provisioning. Before that, we create the IAM Role (Config Manager Role) required for the Lambda function. This function lets us provision Lambda-backed Custom Resources in CloudFormation, which in turn invoke the function whenever a configuration change is required.

STEP 2: Deploy Config Map Manager

Next, we create a CodeBuild project that we call the Config Map Manager, as it configures the aws-auth ConfigMap in Kubernetes whenever required. To do that, the Lambda function starts a build in the CodeBuild project. We store all our script files in S3 and point the CodeBuild project at that S3 location as its source repository.

These two components enable us to perform all configuration management tasks as part of resource provisioning.

STEP 3: Provision Prerequisites

Here, we create the Cluster Security Group and Node Security Group.

STEP 4: Provision Cluster

Then we create the Cluster Service Role and provision the actual EKS Cluster.

STEP 5: Deploy Add-ons

Add-ons are individual resources in CloudFormation. Here we provision vpc-cni, kube-proxy and coredns add-ons.

STEP 6: Enable Logging

In this step, we provision a Lambda-backed Custom Resource which in turn calls the Config Manager (Lambda function) and enables API Server, Audit, Authenticator, Controller Manager and Scheduler logging.
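As an illustration of what the Config Manager might do at this step, the sketch below builds the `logging` argument for the EKS `UpdateClusterConfig` API and passes it to an injected client. The function names are hypothetical and this is not taken from the repository. In a real Lambda function, the client would be `boto3.client("eks")`.

```python
# The five control plane log types that EKS can send to CloudWatch Logs.
LOG_TYPES = ["api", "audit", "authenticator", "controllerManager", "scheduler"]


def logging_config(enabled=True, types=LOG_TYPES):
    """Return the `logging` argument for the EKS UpdateClusterConfig call."""
    return {"clusterLogging": [{"types": list(types), "enabled": enabled}]}


def enable_logging(eks_client, cluster_name):
    """Enable all control plane log types and return the update ID."""
    response = eks_client.update_cluster_config(
        name=cluster_name,
        logging=logging_config(),
    )
    return response["update"]["id"]
```

Passing the client in as a parameter keeps the function easy to exercise with a stub, without needing AWS credentials.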

STEP 7: Initialise Config Map

In this step, we provision another Custom Resource which calls the Config Manager Lambda function. But this time, the Lambda function triggers a build in CodeBuild, which executes a kubectl command on the fly to update the aws-auth ConfigMap in Kubernetes with the right privileges for the standard users and groups in your organisation.
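One way the Lambda function could tell the build which cluster to configure is through environment variable overrides on the CodeBuild `StartBuild` call. The sketch below assumes this approach. The variable names `CLUSTER_NAME` and `SCRIPT_NAME` and both function names are hypothetical, and the repository may pass its parameters differently.

```python
def build_request(project_name, cluster_name, script_name):
    """Assemble keyword arguments for the CodeBuild StartBuild call."""
    return {
        "projectName": project_name,
        "environmentVariablesOverride": [
            # Hypothetical variable names read by the build script.
            {"name": "CLUSTER_NAME", "value": cluster_name, "type": "PLAINTEXT"},
            {"name": "SCRIPT_NAME", "value": script_name, "type": "PLAINTEXT"},
        ],
    }


def start_config_build(codebuild_client, project_name, cluster_name, script_name):
    """Start the build and return its ID."""
    request = build_request(project_name, cluster_name, script_name)
    response = codebuild_client.start_build(**request)
    return response["build"]["id"]
```

Inside the build, a script would then typically run `aws eks update-kubeconfig --name "$CLUSTER_NAME"` followed by `kubectl apply -f` on the rendered aws-auth file. Because the build runs under the same service role that created the cluster, kubectl is authorised to make the change.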

This completes provisioning a new EKS Cluster with all required configuration applied.

STEP 0: Wait, what?

Actually, we need something very important before performing any of the steps above, and now is the right time to explain it.

We need a Service Role with multiple service principals as shown in the following CloudFormation template snippet:

    Resources:
      EKSFactoryServiceRole:
        Type: AWS::IAM::Role
        Properties:
          RoleName: !Sub EKSFactory-${AWS::Region}-ServiceRole
          Description: EKS Factory Service Role used by different services e.g. Service Catalog, CloudFormation, and CodeBuild for managing EKS environments.
          AssumeRolePolicyDocument:
            Statement:
              - Action: sts:AssumeRole
                Effect: Allow
                Principal:
                  Service:
                    - cloudformation.amazonaws.com
                    - codebuild.amazonaws.com
                    - servicecatalog.amazonaws.com
          ManagedPolicyArns:
            - arn:aws:iam::aws:policy/AdministratorAccess

We will create this role as part of the solution deployment which is discussed later. We need this role before provisioning anything because CloudFormation, CodeBuild and Service Catalog (which you will see later) services will use the same role.

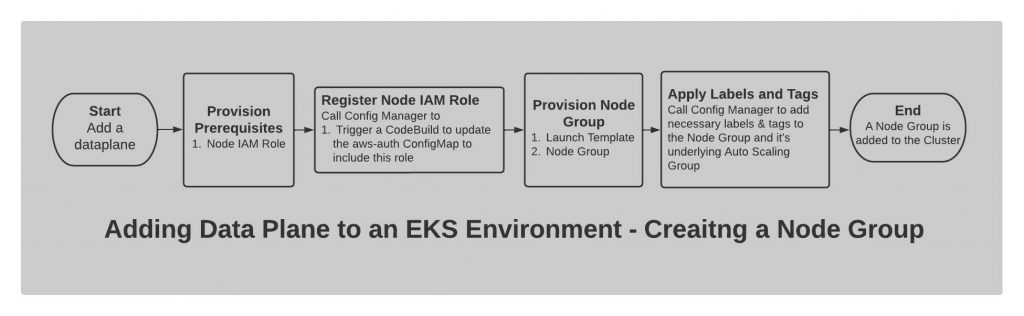

STACK 2: EKS Data Plane/Node Group

Once an EKS environment is created and the Cluster is provisioned, we can provision one or more Data Planes (Node Groups) using this stack. The workflow below shows the resources provisioned in this stack. As before, a child stack is created in each of these steps.

STEP 1: Provision Prerequisites

First, we create a Node IAM Role required for the Node Group.

STEP 2: Register Node IAM Role

Then, we deploy a Custom Resource which calls the Config Manager (Lambda function) and triggers a build in CodeBuild. The build executes a kubectl command to add the Node IAM Role to the aws-auth ConfigMap in Kubernetes. This is required for the nodes to be able to join the cluster.
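The entry that gets added for a node role has a well-known shape in EKS: the username `system:node:{{EC2PrivateDNSName}}` and the groups `system:bootstrappers` and `system:nodes`. The sketch below shows that shape and an idempotent way to add it to an already parsed `mapRoles` list. The function names are hypothetical, and the solution itself performs this step with kubectl inside CodeBuild rather than in Python.

```python
# Groups a worker node role needs in order to register with the cluster.
NODE_GROUPS = ["system:bootstrappers", "system:nodes"]


def node_role_mapping(role_arn):
    """Return the aws-auth mapRoles entry for a node IAM role."""
    return {
        "rolearn": role_arn,
        "username": "system:node:{{EC2PrivateDNSName}}",
        "groups": list(NODE_GROUPS),
    }


def register_node_role(map_roles, role_arn):
    """Return a new mapRoles list that contains role_arn exactly once."""
    if any(entry.get("rolearn") == role_arn for entry in map_roles):
        return list(map_roles)
    return list(map_roles) + [node_role_mapping(role_arn)]
```

Making the operation idempotent matters here because CloudFormation may invoke the Custom Resource again on stack updates, and duplicate entries in the ConfigMap are best avoided.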

STEP 3: Provision Node Group

The EKS Node Group is provisioned in this step. Before that, the stack creates the Launch Template on which the Node Group is based.

STEP 4: Apply Labels and Tags

We deploy a Custom Resource at this stage that calls the Config Manager (Lambda function) to add any additional Kubernetes labels to the Node Group and tags to the underlying Auto Scaling Group (ASG). Tags added to the ASG at this stage do not propagate to EC2 instances that the ASG has already launched, so the Lambda function also adds the tags directly to those existing instances.
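The labels and tags arrive as comma-separated `key=value` strings, as in the `AdditionalLabels` and `AdditionalTags` parameters of the Data Plane product. A minimal sketch of how such strings could be parsed and turned into the tag structures used by the Auto Scaling and EC2 APIs follows. The function names are hypothetical and the repository's own parsing may differ.

```python
def parse_pairs(text):
    """Parse 'k1=v1,k2=v2' into a dict, rejecting items without a key."""
    pairs = {}
    for item in text.split(","):
        item = item.strip()
        if not item:
            continue  # tolerate empty input and trailing commas
        key, separator, value = item.partition("=")
        if not separator or not key.strip():
            raise ValueError(f"expected key=value, got {item!r}")
        pairs[key.strip()] = value.strip()
    return pairs


def asg_tags(asg_name, tags):
    """Tag list in the shape used by Auto Scaling CreateOrUpdateTags."""
    return [
        {
            "ResourceId": asg_name,
            "ResourceType": "auto-scaling-group",
            "Key": key,
            "Value": value,
            "PropagateAtLaunch": True,  # applies to instances launched later
        }
        for key, value in tags.items()
    ]


def ec2_tags(tags):
    """Tag list in the shape used by EC2 CreateTags for running instances."""
    return [{"Key": key, "Value": value} for key, value in tags.items()]
```

With boto3, the first list would go to `create_or_update_tags(Tags=...)` on the Auto Scaling client and the second to `create_tags(Resources=[...], Tags=...)` on the EC2 client. Labels parsed the same way could be applied through the EKS `update_nodegroup_config` call using `labels={"addOrUpdateLabels": ...}`.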

Finally, we get a new Node Group whose underlying nodes have joined the EKS Cluster.

Making it self-service

At this point, we can provision the Control Plane, the Data Plane and all the underlying resources in a fully automated way, as long as we have the CloudFormation template files to hand. If we now package them as Service Catalog Products, end users can consume them in a self-service manner.

Solution Deployment

The solution is available on GitHub and can be deployed in one step by executing install.sh as described in the README.md file. It creates a Service Catalog Portfolio called EKS Factory and deploys the EKS Control Plane and EKS Data Plane as two separate products. The solution also creates the EKSFactoryServiceRole (as discussed before) with multiple service principals so that the other services (CloudFormation, CodeBuild and Service Catalog) can use the same role.

Note: If we deploy the solution in Service Catalog, technically we don’t need the CloudFormation service principal in the EKSFactoryServiceRole. But I kept it in my source code in case you don’t use Service Catalog and provision all the resources directly via CloudFormation.

Key Consideration before deployment

Update the aws-auth.yml.template based on your requirements. You need to add the IAM users/roles that will have access to the provisioned clusters. Typically, these are standard users/roles, such as system administrators and audit users, who need access to all the resources. You can define different permissions for different users/roles as shown below:

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: aws-auth
      namespace: kube-system
    data:
      mapRoles: |
        - rolearn: arn:aws:iam::AWSAccountID:role/EKS-Admin
          username: EKS-Admin
          groups:
            - system:masters
        - rolearn: arn:aws:iam::AWSAccountID:role/EKS-Users
          username: EKS-Users
      mapUsers: |
        - userarn: arn:aws:iam::AWSAccountID:user/Audit-User
          username: audit-user
          groups:
            - reporting:read

Key Features of the Solution

1. Self-service

The entire infrastructure can be provisioned, upgraded and decommissioned in a self-service manner with no manual intervention at the backend. This brings accuracy and agility.

2. IaC

Infrastructure is provisioned using declarative CloudFormation templates, following a “CloudFormation-first” approach. This makes it very easy for both developers and SysAdmins to maintain the environment going forward.

You can provision a whole infrastructure (an EKS Cluster with two Node Groups) using a simple YAML template like this:

    AWSTemplateFormatVersion: 2010-09-09
    Description: Template to create an EKS Cluster and two Node Groups.
    Resources:
      Cluster:
        Type: AWS::ServiceCatalog::CloudFormationProvisionedProduct
        Properties:
          ProductName: EKS Control Plane
          ProvisionedProductName: my-prod-EKS-control-plane
          ProvisioningArtifactName: New Cluster
          ProvisioningParameters:
            - Key: EnvironmentName
              Value: my-prod
            - Key: KubernetesVersion
              Value: "1.21"
            - Key: KmsKeyArn
              Value: <YOUR KMS KEY ARN>
            - Key: VpcId
              Value: <YOUR VPC ID>
            - Key: Subnets
              Value: "<YOUR SUBNET IDs SEPARATED BY COMMAS>"
            - Key: VpcCniVersion
              Value: v1.10.1-eksbuild.1
            - Key: CoreDnsVersion
              Value: v1.8.4-eksbuild.1
            - Key: KubeProxyVersion
              Value: v1.21.2-eksbuild.2
      NodeGroup1:
        Type: AWS::ServiceCatalog::CloudFormationProvisionedProduct
        Properties:
          ProductName: EKS Data Plane
          ProvisionedProductName: my-prod-EKS-121-141-NodeGroup-1
          ProvisioningArtifactName: New Node Group
          ProvisioningParameters:
            - Key: EnvironmentName
              Value: my-prod
            - Key: KubernetesVersionNumber
              Value: 121
            - Key: BuildNumber
              Value: 141
            - Key: NodeGroupName
              Value: NodeGroup-1
            - Key: AmiId
              Value: <YOUR AMI ID>
            - Key: CapacityType
              Value: ON_DEMAND
            - Key: InstanceType
              Value: m5.2xlarge
            - Key: VolumeSize
              Value: 60
            - Key: VolumeType
              Value: gp3
            - Key: MinSize
              Value: 1
            - Key: MaxSize
              Value: 3
            - Key: DesiredSize
              Value: 3
            - Key: Subnets
              Value: "<YOUR SUBNET IDs SEPARATED BY COMMAS>"
            - Key: EnableCpuCfsQuota
              Value: "true"
            - Key: HttpTokenState
              Value: optional
        DependsOn:
          - Cluster
      NodeGroup2:
        Type: AWS::ServiceCatalog::CloudFormationProvisionedProduct
        Properties:
          ProductName: EKS Data Plane
          ProvisionedProductName: my-prod-EKS-121-141-NodeGroup-2
          ProvisioningArtifactName: New Node Group
          ProvisioningParameters:
            - Key: EnvironmentName
              Value: my-prod
            - Key: KubernetesVersionNumber
              Value: 121
            - Key: BuildNumber
              Value: 141
            - Key: NodeGroupName
              Value: NodeGroup-2
            - Key: AmiId
              Value: <YOUR AMI ID>
            - Key: CapacityType
              Value: ON_DEMAND
            - Key: InstanceType
              Value: m5.2xlarge
            - Key: KeyPair
              Value: <YOUR KEY PAIR NAME>
            - Key: VolumeSize
              Value: 60
            - Key: VolumeType
              Value: gp3
            - Key: MinSize
              Value: 1
            - Key: MaxSize
              Value: 3
            - Key: DesiredSize
              Value: 3
            - Key: Subnets
              Value: "<YOUR SUBNET IDs SEPARATED BY COMMAS>"
            - Key: EnableCpuCfsQuota
              Value: "true"
            - Key: HttpTokenState
              Value: optional
            - Key: TaintKey
              Value: <YOUR TAINT KEY>
            - Key: TaintValue
              Value: <YOUR TAINT VALUE>
            - Key: TaintEffect
              Value: <YOUR TAINT EFFECT>
            - Key: AdditionalLabels
              Value: "label1=value1,label2=value2"
            - Key: AdditionalTags
              Value: "tag1=value1,tag2=value2"
        DependsOn:
          - Cluster

3. Integrated Build Platform

CodeBuild enables us to perform any kind of pre- or post-deployment configuration and to deploy workloads into the environment.

4. Integrated Business Logic Layer

The Lambda function enables us to write any custom component or logic and integrate features in a plug-and-play manner.

5. Serverless

The solution is completely serverless, which makes it a highly available, scalable and cost-effective approach compared to a traditional model where we might need dedicated servers to run components such as Jenkins.

6. Cloud-native

The solution doesn’t use any third-party tools. This suits organisations that use AWS and prefer cloud-native solutions.

7. Flexible

The solution is flexible: components can be updated separately to add new features. For example, we can use it to deploy Cluster Autoscaler or applications such as monitoring tools after provisioning the cluster. All we have to do is prepare the steps in a shell script file and invoke a build with the script.

Default behaviour of the Solution

a. Separate Node IAM Role for each Node Group

We create a separate Node IAM Role for each Node Group. This is based on the assumption that different Node Groups may need different permissions. In that case, you can modify the Node IAM Roles after provisioning the Data Plane (Node Group).

b. Default labels and tags

We add environmentName both as a Kubernetes label and as an EC2 tag on the underlying nodes. You can also provide any number of additional labels and tags per Node Group while provisioning it.

c. Zero/One Taint per Node Group

The Node Group template eks-node-group.yaml allows zero or one Taint to be applied to the underlying nodes. If you want more Taints to be applied, the solution can easily be extended to support that, similar to how it’s done for labels and tags.
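As a rough idea of what such an extension could look like, the sketch below parses several taints from one comma-separated string and converts them to the taint structure used by the EKS node group APIs. The input format `key=value:Effect` is an assumption borrowed from kubectl's taint syntax, and `parse_taints` is a hypothetical helper, not part of the current solution.

```python
# Map kubectl-style effect names to the values the EKS API expects.
EFFECTS = {
    "NoSchedule": "NO_SCHEDULE",
    "NoExecute": "NO_EXECUTE",
    "PreferNoSchedule": "PREFER_NO_SCHEDULE",
}


def parse_taints(text):
    """Parse 'k1=v1:NoSchedule,k2:NoExecute' into EKS-style taint dicts."""
    taints = []
    for item in text.split(","):
        item = item.strip()
        if not item:
            continue  # tolerate empty input and trailing commas
        pair, separator, effect = item.rpartition(":")
        if not separator or effect not in EFFECTS:
            raise ValueError(f"expected key[=value]:Effect, got {item!r}")
        key, _, value = pair.partition("=")
        if not key:
            raise ValueError(f"missing taint key in {item!r}")
        taint = {"key": key, "effect": EFFECTS[effect]}
        if value:
            taint["value"] = value  # the value part is optional
        taints.append(taint)
    return taints
```

For example, `parse_taints("dedicated=gpu:NoSchedule")` yields a single taint with key `dedicated`, value `gpu` and effect `NO_SCHEDULE`.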

Taking the Solution to next level

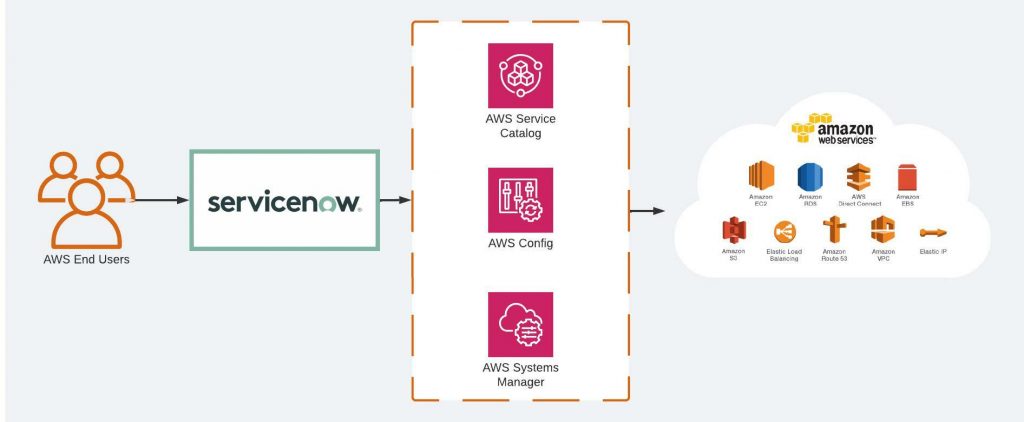

Many organisations use ServiceNow for their ITIL process. We can enable end users to request EKS resources (Cluster, Node Group) via ServiceNow.

This is achieved by using AWS Service Management Connector for ServiceNow (formerly the AWS Service Catalog Connector). The connector allows ServiceNow end users to provision, manage, and operate AWS resources natively through ServiceNow.

How did you find this solution? Feel free to share your thoughts in the comments below.
